publications

publications in reverse chronological order.

2025

How Compositional Generalization and Creativity Improve as Diffusion Models are Trained. In 42nd International Conference on Machine Learning (ICML), 2025

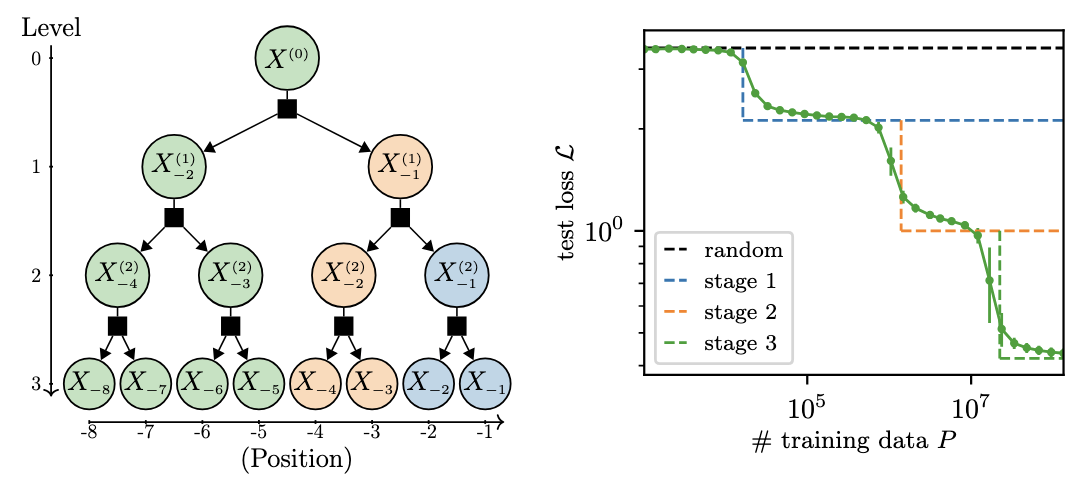

Scaling Laws and Representation Learning in Simple Hierarchical Languages: Transformers vs. Convolutional Architectures. arXiv preprint, 2025

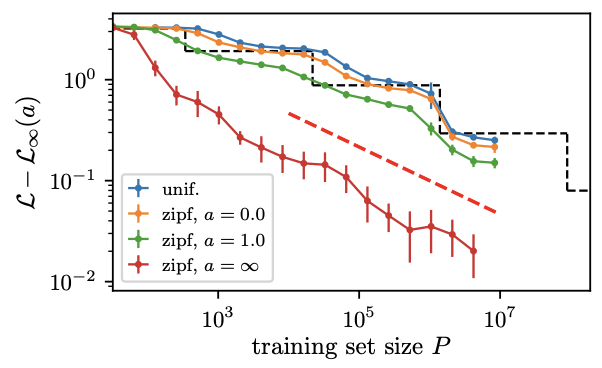

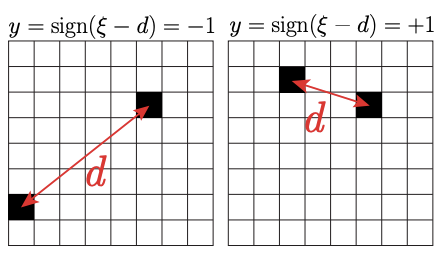

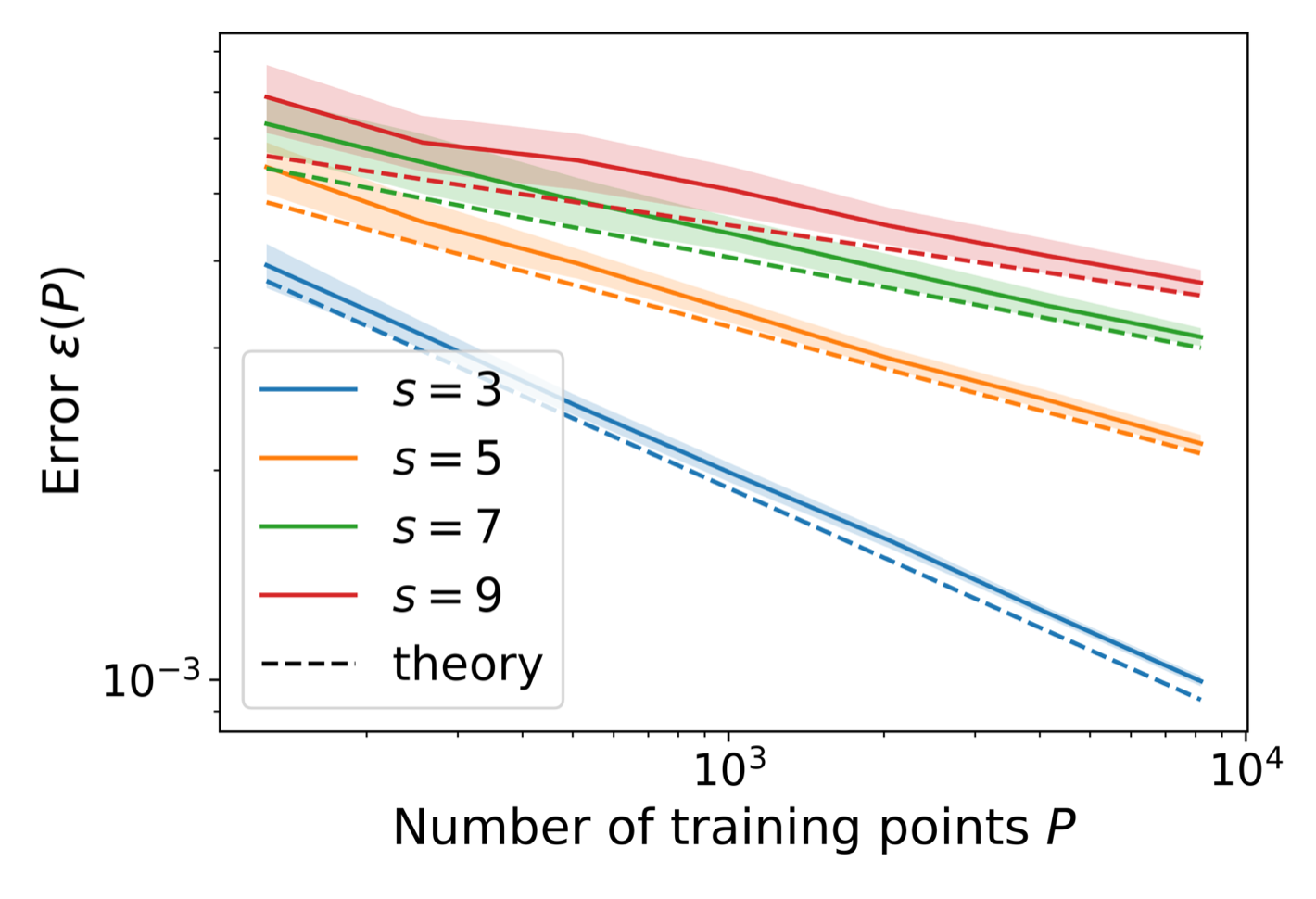

Learning curves theory for hierarchically compositional data with power-law distributed features. In 42nd International Conference on Machine Learning (ICML), 2025

2024

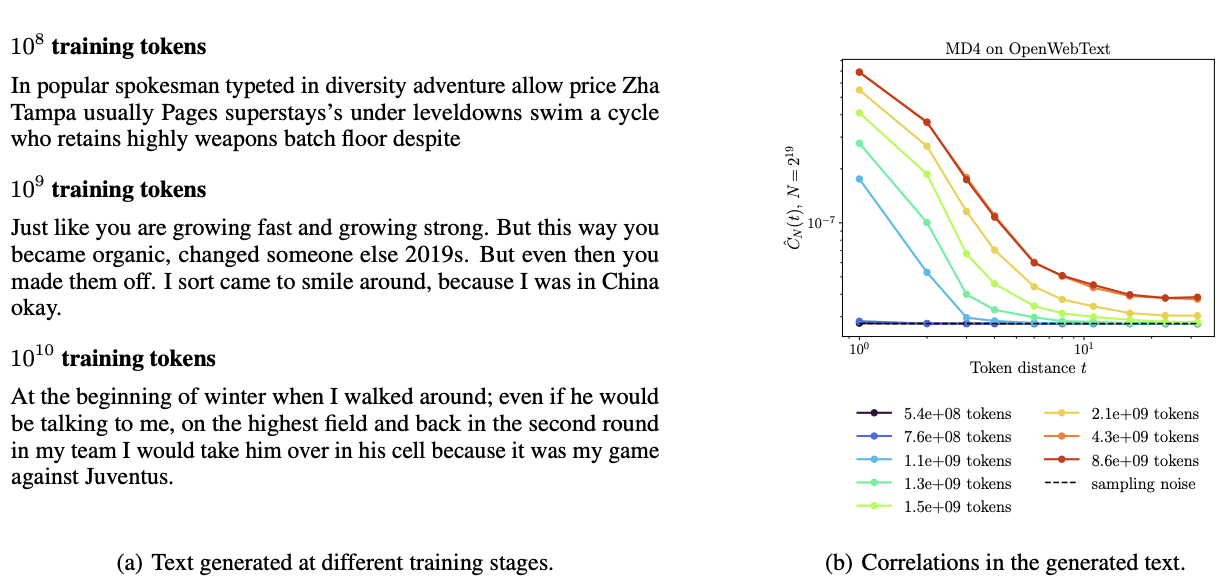

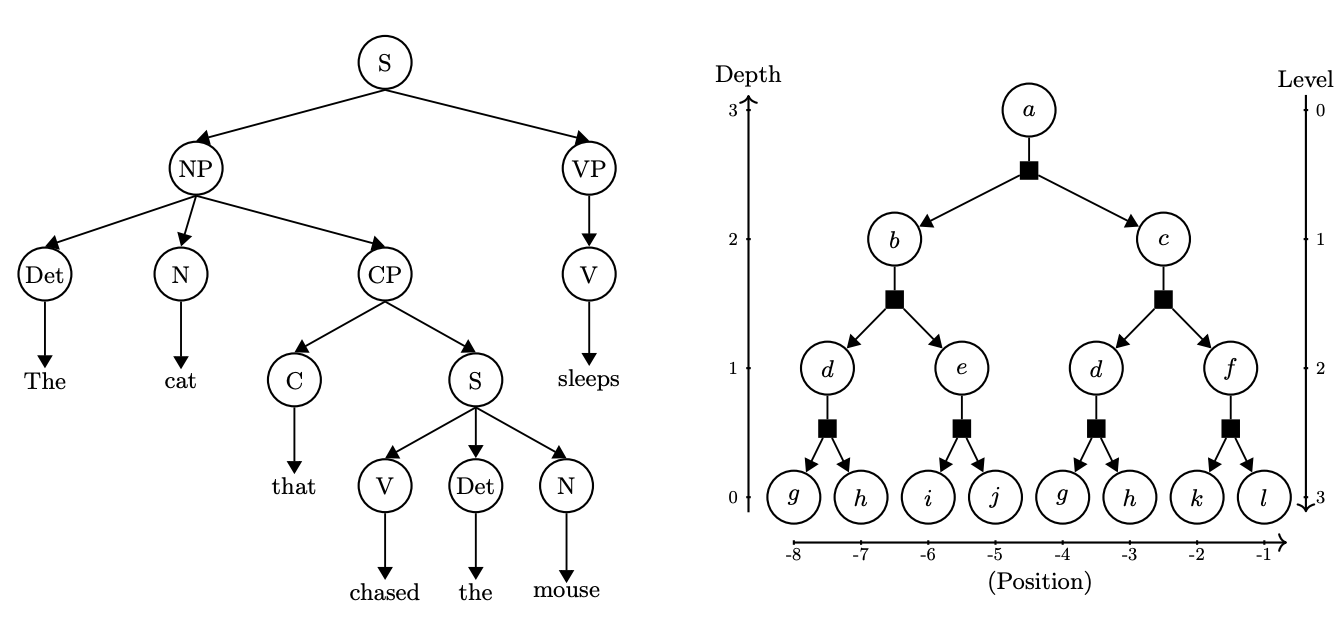

Towards a theory of how the structure of language is acquired by deep neural networks. In Advances in Neural Information Processing Systems (NeurIPS) 37, 2024

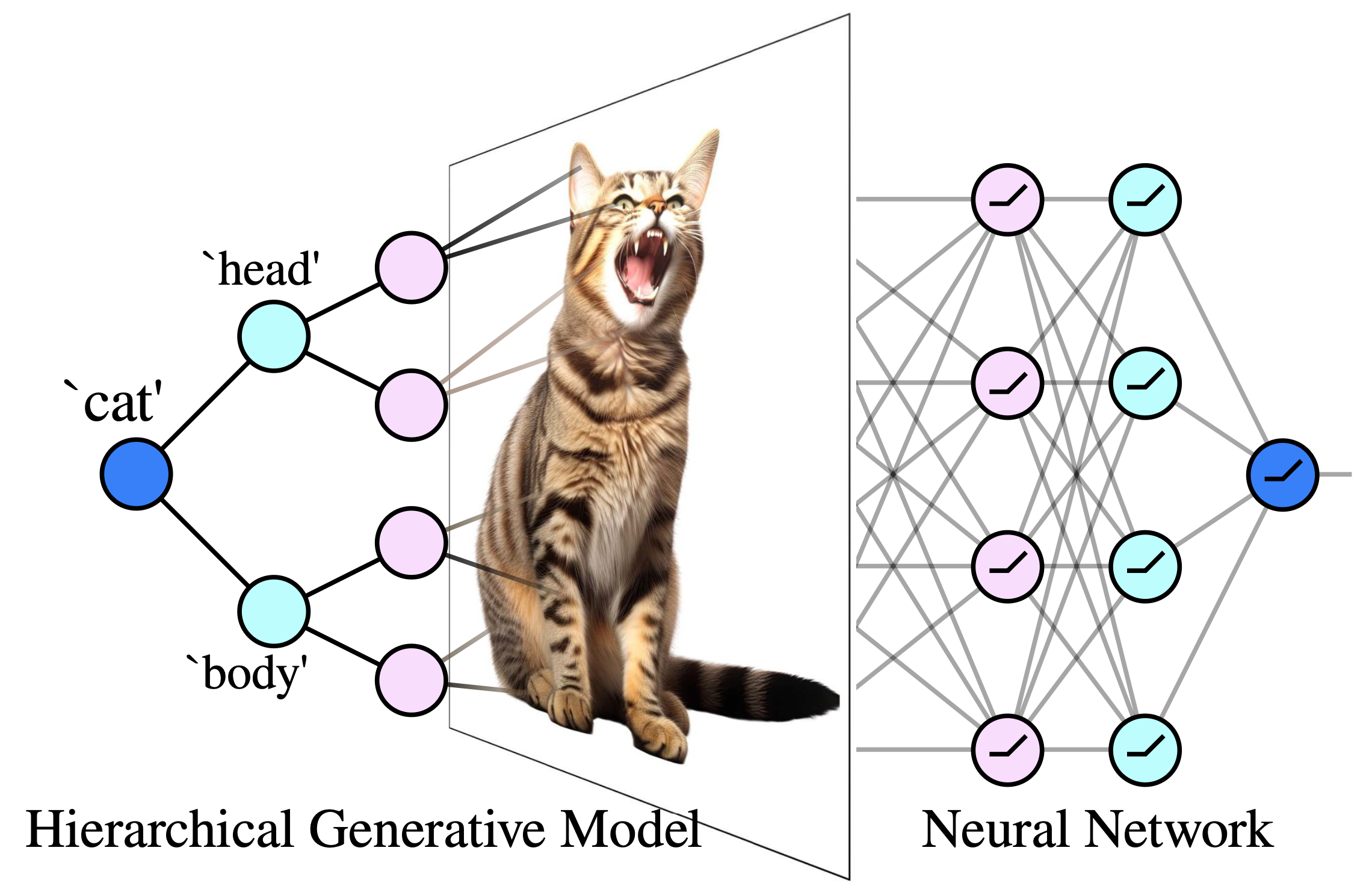

How Deep Neural Networks Learn Compositional Data: The Random Hierarchy Model. Phys. Rev. X, 2024

2023

How deep convolutional neural networks lose spatial information with training. Mach. Learn.: Sci. Technol., 2023

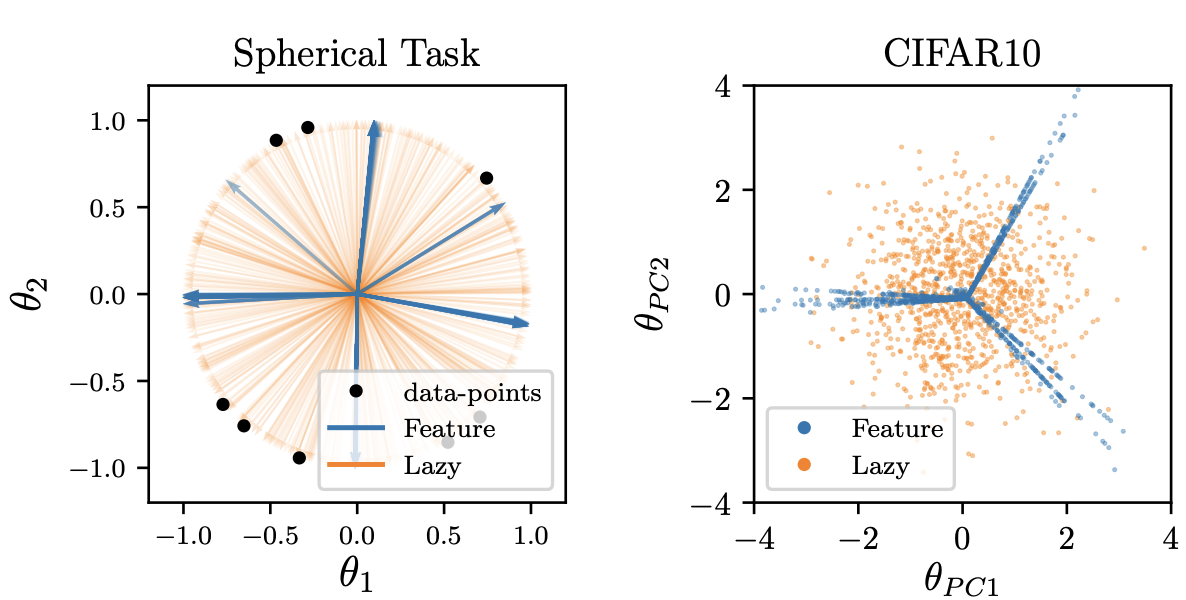

What Can Be Learnt With Wide Convolutional Neural Networks? In 40th International Conference on Machine Learning (ICML), 2023

2022

Learning sparse features can lead to overfitting in neural networks. In Advances in Neural Information Processing Systems (NeurIPS) 35, 2022

2021

Locality defeats the curse of dimensionality in convolutional teacher-student scenarios. In Advances in Neural Information Processing Systems (NeurIPS) 34, 2021